The Federal Communications Commission (FCC) announced a proposed regulation that would require political ads on broadcast TV and radio to disclose the use of artificial intelligence (AI) to the audience through an on-air announcement.

FCC commissioners voted 3-2 on Thursday to advance the proposed rulemaking to the public comment stage, where the agency will gather feedback before the regulation is finalized. It’s unclear whether a final version of the rule will take effect before the November elections, although the FCC has suggested that it aims to finalize the rule by then.

“Facing a rising tide of disinformation, roughly three-quarters of Americans say they are concerned about misleading AI-generated content,” FCC Chairwoman Jessica Rosenworcel said in a statement. “Today the FCC takes a major step to guard against AI being used by bad actors to spread chaos and confusion in our elections.”

“Voters deserve to know if the voices and images in political commercials are authentic or if they have been manipulated,” she added. “To be clear, we make no judgment on the content being shared or prevent it from airing. This is not about telling the public what is true and what is false. It is about empowering every voter, viewer, and listener to make their own choices.”

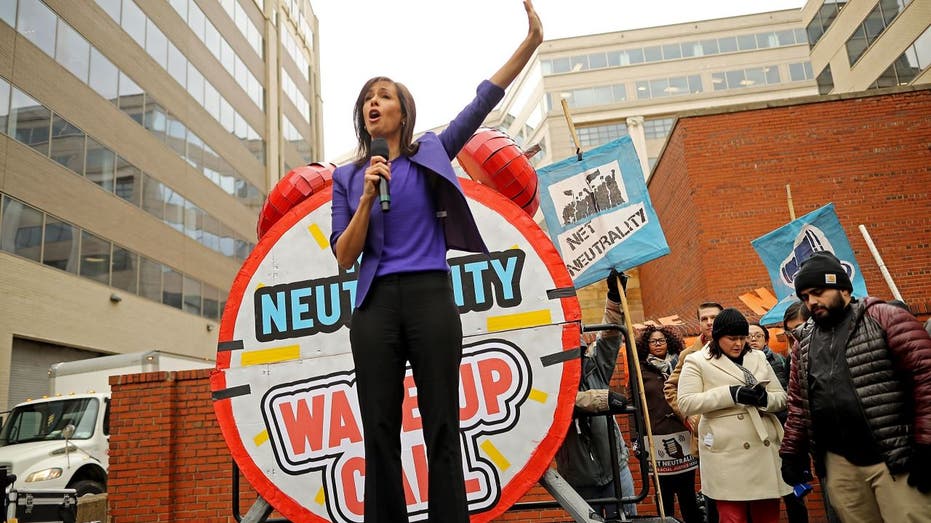

FCC Chairwoman Jessica Rosenworcel said the agency’s proposed AI disclosure rule for political ads would prevent bad actors from using the tool to sow chaos. (Joan Cros/NurPhoto via Getty Images)

Under the rule, AI-generated content would be defined as “an image, audio, or video that has been generated using computational technology or other machine-based system that depicts an individual’s appearance, speech or conduct, or an event, circumstance, or situation, including, in particular, AI-generated voices that sound like human voices, and AI-generated actors that appear to be human actors.”

“We believe that this definition would adequately encompass content artificially created for use in political advertising,” the FCC wrote, while noting it is seeking comment on the definition and is inviting commenters to offer alternative definitions.

Rosenworcel and the FCC’s two other Democratic appointees advanced the proposed rule on a 3-2 vote. (Chip Somodevilla / Getty Images)

Political advertisements made with the use of AI would be required to include an on-air announcement immediately before or after the ad that states, “[The following] or [This] message contains information generated in whole or in part by artificial intelligence.”

The disclosure could be communicated verbally in a voice that is “clear, conspicuous, and at a speed that is understandable” or visually with “letters equal to or greater than four percent of the vertical picture height for at least four seconds.”

Commissioner Brendan Carr, one of the two votes against the proposal, wrote in his dissent that the agency is overstepping its authority with the rule. He also noted that the Federal Election Commission (FEC), which is considering a similar regulation, has warned that the FCC’s proposal intrudes on its jurisdiction.

Commissioner Brendan Carr criticized the FCC’s proposal to regulate AI in political ads, arguing it’s outside the agency’s purview and comes in the middle of the election season. (Chip Somodevilla / Getty Images)

Carr said the FCC has “stated its intent to complete this rulemaking before Election Day” and that the “comment cycle in this proceeding will still be open in September – the same month when early voting starts in states across the country.”

“This is a recipe for chaos. Even if this rulemaking were completed with unprecedented haste, any new regulations would likely take effect after early voting already started. And the FCC can only muddy the waters,” he explained.

“Suddenly, Americans will see disclosures for ‘AI-generated content’ on some screens but not others, for some political ads but not others, with no context for why they see these disclosures or which part of the political advertisement contains AI,” Carr wrote, noting that the FCC’s rule doesn’t apply to political ads using AI that air on streaming platforms or social media sites.

The FCC noted that the FEC is currently considering a petition to amend its rules to clarify that the existing campaign-law prohibition on fraudulent misrepresentation by federal election candidates and their agents applies to AI-generated content in campaign ads or other campaign communications.

In early June, FEC Chairman Sean Cooksey warned the FCC that he believes the proposed rule “would invade the FEC’s jurisdiction.” Cooksey also urged the FCC to delay the rule’s effective date until after Election Day, Nov. 5, arguing that if it takes effect during the election, it “would create confusion and disarray among political campaigns, and it would chill broadcasters from carrying many political ads during the most crucial period before Americans head to the polls.”

Additionally, 11 states – California, Idaho, Indiana, Michigan, Minnesota, New Mexico, Oregon, Texas, Utah, Washington and Wisconsin – have enacted laws regulating AI-generated “deepfakes” in political ads and other campaign communications, while similar laws are under consideration in 28 other states.